My opinion on GitHub Copilot

Quite a few of you have asked for my opinion on GitHub Copilot, and I waited until I had access to the technical preview before writing about it. I have now tested the tool in a variety of situations and, even though it is only a technical preview, the results are rather astonishing.

Before you start

Before discussing how Copilot works in practice, I think it's important to look at a few issues raised by the very concept of the tool.

The ethical and legal problem

The principle behind this AI raises a legal and ethical problem, because GitHub built it from the open-source code hosted on its platform. The catch is that code published on the platform is not necessarily free to use, so one can wonder whether GitHub really has the right to exploit the code of the repositories it hosts for such a project (especially if Copilot later becomes a commercial product).

It is also a problem for users, who may be offered snippets of licensed code without knowing it.

Telemetry

GitHub's FAQ states that part of your code is sent to its servers so that the AI can use it as context:

In order to generate suggestions, GitHub Copilot transmits part of the file you are editing to the service

The problem is that if you are working on a file that contains sensitive data, that data could end up on GitHub's servers, where someone with bad intentions could exploit it. It will be interesting to see whether a version that runs locally becomes available in the near future (as Tabnine offers, for example).

Bad code

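The last problem with this assistant is that it can produce bad code, or code that contains security vulnerabilities. In the following snippet, the last line was generated by Copilot: it builds the SQL query by concatenating a value taken straight from the URL, which opens the door to SQL injection.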

```php
// Connect to the MySQL database "test" as user "root" with password "root"
$pdo = new PDO("mysql:host=localhost;dbname=test", "root", "root");

// Find the article with the ID from the URL
$pdo->exec('SELECT * FROM article WHERE id = "' . $_GET['id'] . '"'); // Line generated by Copilot
```

The assistant also has to work out which version of the language you are using from the context, which is not always obvious. Many languages have significant syntax differences between versions, and these can lead Copilot to offer inappropriate suggestions. The same problem will arise whenever a new version of a language comes out: the AI will not necessarily have been trained on it and will offer few relevant suggestions (PHP 8, for example).
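Coming back to the SQL snippet above: the standard way to avoid this injection is to pass the ID as a bound parameter of a prepared statement instead of concatenating it into the query. Below is a minimal sketch, assuming an in-memory SQLite database so it runs without a MySQL server (with MySQL, only the DSN and credentials would change); the `article` rows and the `findArticle()` helper are hypothetical.

```php
<?php
// Assumption: in-memory SQLite stands in for MySQL so the sketch is self-contained.
$pdo = new PDO('sqlite::memory:');
$pdo->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);

// Hypothetical table and rows, just enough to run the query.
$pdo->exec('CREATE TABLE article (id INTEGER PRIMARY KEY, title TEXT)');
$pdo->exec("INSERT INTO article (id, title) VALUES (1, 'Hello'), (2, 'World')");

/**
 * Hypothetical helper: fetch one article by ID, or null if none matches.
 * The ID is sent as a bound parameter, never concatenated into the SQL,
 * so a value such as `1" OR "1"="1` is treated as plain data.
 */
function findArticle(PDO $pdo, string $id): ?array
{
    $stmt = $pdo->prepare('SELECT * FROM article WHERE id = ?');
    $stmt->execute([$id]);
    $row = $stmt->fetch(PDO::FETCH_ASSOC);
    return $row === false ? null : $row;
}

// $_GET is empty when run from the command line, hence the default value.
$article = findArticle($pdo, $_GET['id'] ?? '1');
```

With the prepared statement, an injection attempt such as `1" OR "1"="1` simply matches no row instead of rewriting the query.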

But in real life, does it work?

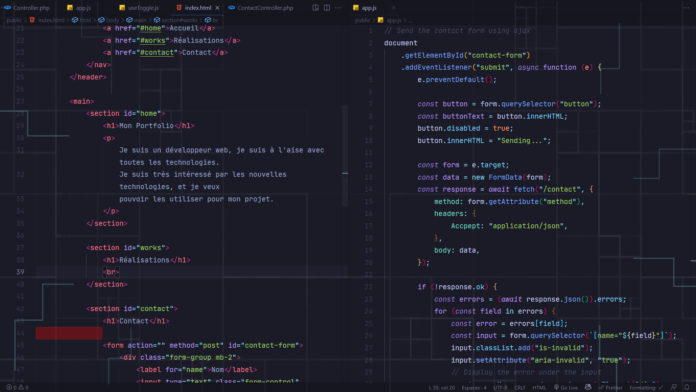

Setting all these considerations aside, one can wonder whether the concept actually works. To be honest, I was rather amazed by the AI's ability to come up with relevant suggestions within a project (as long as it is given enough context).

For example, inside a JavaScript function that already uses ES2015+ syntax, the suggested snippets are automatically written to modern standards. On the other hand, when you start a file from scratch, the assistant tends to offer somewhat dated suggestions (jQuery code, XMLHttpRequest and var).

This context can be provided in different ways, but a good approach is often to write a comment stating the intent behind the code: the AI then takes care of translating that intent into code, and on this point it is rather impressive.

However, this can lead to a bad practice where you end up writing a comment that merely describes the line of code that follows. Conversely, it works very well when writing well-documented functions and, through its suggestions, it can even encourage you to write better comments.
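As a hypothetical illustration of the difference: a comment such as `// convert to lowercase` above a call to `strtolower()` only repeats the code, whereas a docblock that says what a function does, with an example, is the kind of context the assistant can turn into a complete body. The `slugify()` function below was written by hand for illustration; it is not a recorded Copilot suggestion.

```php
<?php
/**
 * Converts a title into a URL-friendly slug.
 * Example: "Hello World!" becomes "hello-world".
 */
function slugify(string $title): string
{
    // Lowercase, then replace every run of non-alphanumeric characters with "-".
    $slug = preg_replace('/[^a-z0-9]+/', '-', strtolower($title));
    // Drop the dashes left at either end (e.g. from a trailing "!").
    return trim($slug, '-');
}
```

The docblock carries the intent and an example; the body is short enough to check at a glance.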

That said, you need to carefully check the code it proposes rather than blindly trusting the assistant. As mentioned above, the AI tends to write code containing small errors, and our role is then to review what it proposes. This is where the concept bothers me personally: it turns you from a developer into a mere code reviewer, which can be counterproductive because small errors are easy to miss.

Personally, I find Copilot an interesting tool when it offers relatively short suggestions that are easy to verify. I would also like a local AI that could adapt to my own coding style and to the logic of each project. We'll see what the future holds.